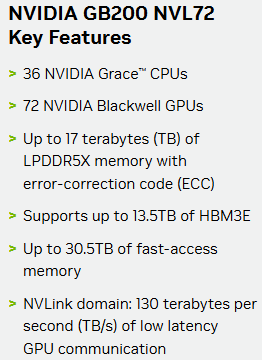

NVIDIA GB200 NVL72 - (36 Grace CPUs & 72 Blackwell GPUs)

Out of stock

Regular price: £3,000,000.00 GBP

Sale price: £2,225,000.00 GBP

All Taxes & Free Shipping applied at Checkout.

*Very High Demand! - Please contact us to check availability*

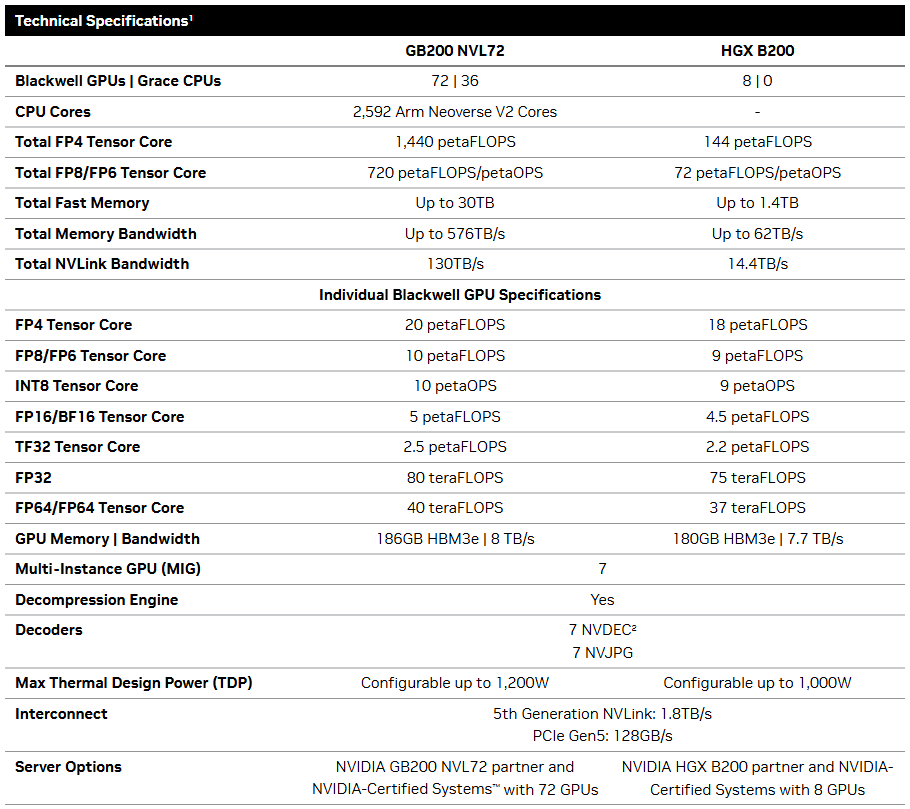

Breaking Barriers in Accelerated Computing

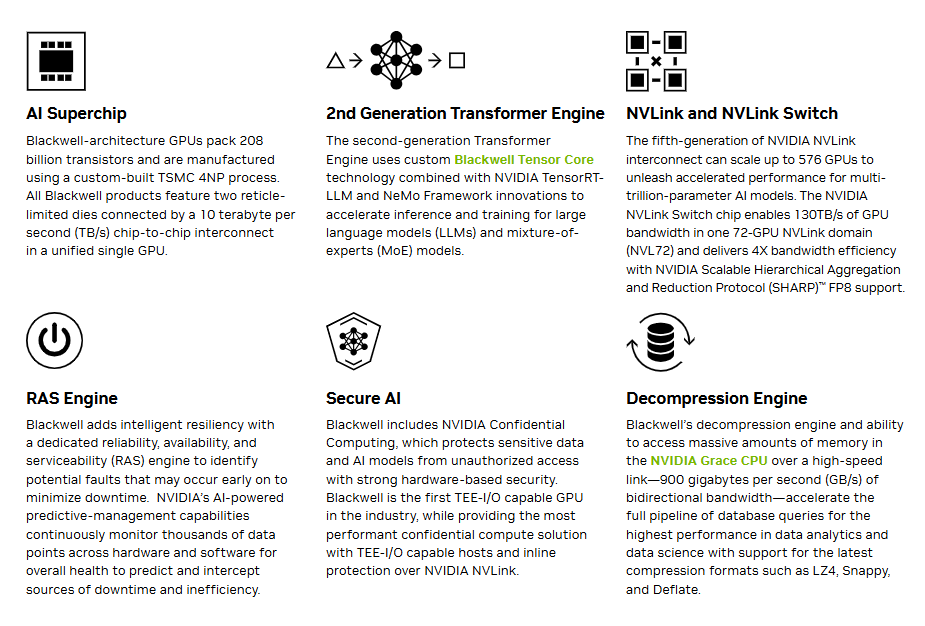

The NVIDIA Blackwell architecture introduces groundbreaking advancements for generative AI and accelerated computing. The incorporation of the second-generation Transformer Engine, alongside the faster and wider NVIDIA NVLink™ interconnect, propels the data center into a new era, with orders of magnitude more performance compared to the previous architecture generation. Further advances in NVIDIA Confidential Computing technology raise the level of security for real-time LLM inference at scale without performance compromise. Blackwell’s new decompression engine, combined with Spark RAPIDS™ libraries, delivers unparalleled database performance to fuel data analytics applications. Blackwell’s multiple advancements build upon generations of accelerated computing technologies to define the next chapter of generative AI with unparalleled performance, efficiency, and scale.

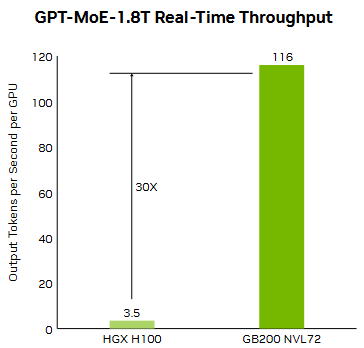

Unlocking Real-Time Trillion-Parameter Models

NVIDIA GB200 NVL72 connects 36 Grace CPUs and 72 Blackwell GPUs in an NVIDIA NVLink-connected, liquid-cooled, rack-scale design. Acting as a single, massive GPU, it delivers 30X faster real-time trillion-parameter large language model (LLM) inference. The GB200 Grace Blackwell Superchip is a key component of the NVIDIA GB200 NVL72, connecting two high-performance NVIDIA Blackwell GPUs and an NVIDIA Grace CPU with the NVLink-C2C interconnect.
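The counts above fit together as simple arithmetic: if each GB200 Superchip carries two Blackwell GPUs and one Grace CPU, a rack with 72 GPUs and 36 CPUs implies 36 superchips. A minimal sketch of that check follows; the constant and function names are illustrative and do not come from any NVIDIA tool.

```python
# Rack composition as described in the text; all names are illustrative only.
GPUS_PER_SUPERCHIP = 2  # two Blackwell GPUs per GB200 Superchip
CPUS_PER_SUPERCHIP = 1  # one Grace CPU per GB200 Superchip
RACK_GPUS = 72
RACK_CPUS = 36


def superchips_in_rack(rack_gpus: int, rack_cpus: int) -> int:
    """Return the number of superchips implied by the GPU and CPU counts."""
    by_gpu, gpu_rem = divmod(rack_gpus, GPUS_PER_SUPERCHIP)
    by_cpu, cpu_rem = divmod(rack_cpus, CPUS_PER_SUPERCHIP)
    # Both counts must describe the same whole number of superchips.
    if gpu_rem or cpu_rem or by_gpu != by_cpu:
        raise ValueError("GPU and CPU counts do not form whole superchips")
    return by_gpu


print(superchips_in_rack(RACK_GPUS, RACK_CPUS))  # 36
```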

Real-Time LLM Inference

GB200 NVL72 introduces cutting-edge capabilities and a second-generation Transformer Engine, which enables FP4 AI. This advancement is made possible with a new generation of Tensor Cores, which introduce new microscaling formats, giving high accuracy and greater throughput. Additionally, the GB200 NVL72 uses NVLink and liquid cooling to create a single, massive 72-GPU rack that can overcome communication bottlenecks.
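To give a rough idea of what a microscaling format does, the sketch below quantizes a block of values to 4-bit floats that share one power-of-two scale. It is an assumption-laden illustration, not NVIDIA's Transformer Engine implementation: the E2M1 value set and the power-of-two shared scale follow the general block-scaling idea, while the function names, the tie-breaking rule, and the block contents are hypothetical.

```python
import math

# Magnitudes representable by a 4-bit E2M1 float (1 sign, 2 exponent, 1 mantissa bit).
# Assumption: this element format is used purely for illustration.
FP4_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
FP4_EMAX = 2  # exponent of the largest power of two in E2M1 (4.0 == 2**2)


def quantize_block(values):
    """Quantize one block to (shared power-of-two scale, list of FP4 values)."""
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return 1.0, [0.0] * len(values)
    # frexp gives max_abs == m * 2**e with 0.5 <= m < 1, so floor(log2) == e - 1.
    _, exponent = math.frexp(max_abs)
    scale = 2.0 ** ((exponent - 1) - FP4_EMAX)
    quantized = []
    for v in values:
        # Clamp to the largest FP4 magnitude, then pick the nearest grid point.
        # Ties go to the smaller magnitude here; real hardware may round differently.
        target = min(abs(v) / scale, FP4_MAGNITUDES[-1])
        nearest = min(FP4_MAGNITUDES, key=lambda m: abs(m - target))
        quantized.append(math.copysign(nearest, v))
    return scale, quantized


def dequantize_block(scale, quantized):
    """Recover approximate values by multiplying by the shared scale."""
    return [scale * q for q in quantized]


scale, q = quantize_block([0.1, -0.75, 3.0, 12.0])
print(scale, q)                     # 2.0 [0.0, -0.5, 1.5, 6.0]
print(dequantize_block(scale, q))   # [0.0, -1.0, 3.0, 12.0]
```

The example shows the trade-off: large values in the block survive almost exactly, while values much smaller than the block maximum lose precision, which is why such formats scale small blocks rather than whole tensors.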

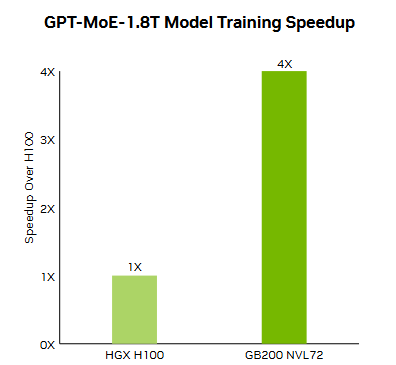

Massive-Scale Training

GB200 NVL72 includes a faster second-generation Transformer Engine featuring 8-bit floating point (FP8) precision, which enables a remarkable 4X faster training for large language models at scale. This breakthrough is complemented by fifth-generation NVLink, which provides 1.8 terabytes per second (TB/s) of GPU-to-GPU interconnect bandwidth, as well as InfiniBand networking and NVIDIA Magnum IO™ software.
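As a back-of-the-envelope illustration of the NVLink figure, the sketch below multiplies it across the rack and estimates an idealized transfer time. It assumes the 1.8 TB/s applies to each GPU and ignores latency and protocol overhead; the function names are hypothetical.

```python
NVLINK_TBPS_PER_GPU = 1.8  # from the text; assumed here to be a per-GPU figure
GPUS_PER_RACK = 72


def aggregate_nvlink_tbps(gpus=GPUS_PER_RACK, per_gpu=NVLINK_TBPS_PER_GPU):
    """Sum of per-GPU NVLink bandwidth across the rack, in TB/s."""
    return gpus * per_gpu


def ideal_transfer_seconds(terabytes, tbps=NVLINK_TBPS_PER_GPU):
    """Lower bound on transfer time; ignores latency and protocol overhead."""
    return terabytes / tbps


print(f"{aggregate_nvlink_tbps():.1f} TB/s")    # 129.6 TB/s
print(f"{ideal_transfer_seconds(0.9):.2f} s")   # 0.50 s
```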

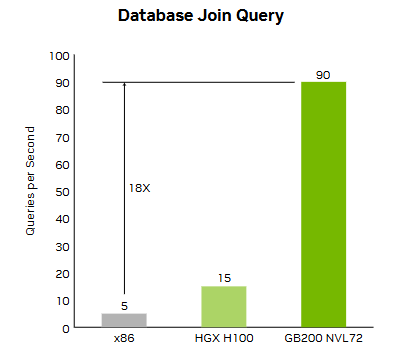

Data Processing

Databases play critical roles in handling, processing, and analyzing large volumes of data for enterprises. GB200 NVL72 takes advantage of the high-bandwidth-memory performance, NVLink-C2C, and dedicated decompression engines in the NVIDIA Blackwell architecture to speed up key database queries by 18X compared to a CPU, delivering 5X better total cost of ownership (TCO).

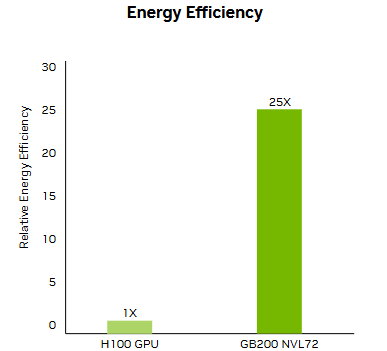

Energy-Efficient Infrastructure

Liquid-cooled GB200 NVL72 racks reduce a data center’s carbon footprint and energy consumption. Liquid cooling increases compute density, reduces the amount of floor space used, and facilitates high-bandwidth, low-latency GPU communication with large NVLink domain architectures. Compared to the NVIDIA H100 air-cooled infrastructure, GB200 NVL72 delivers 25X more performance at the same power while reducing water consumption.
