
NVIDIA HGX B200 - (8 Blackwell GPUs)

Out of stock

Regular price £380,000.00 GBP

Sale price £270,000.00 GBP

All Taxes & Free Shipping applied at Checkout.

*Very High Demand! - Please contact us to check availability*

Propelling the Data Center Into a New Era of Accelerated Computing

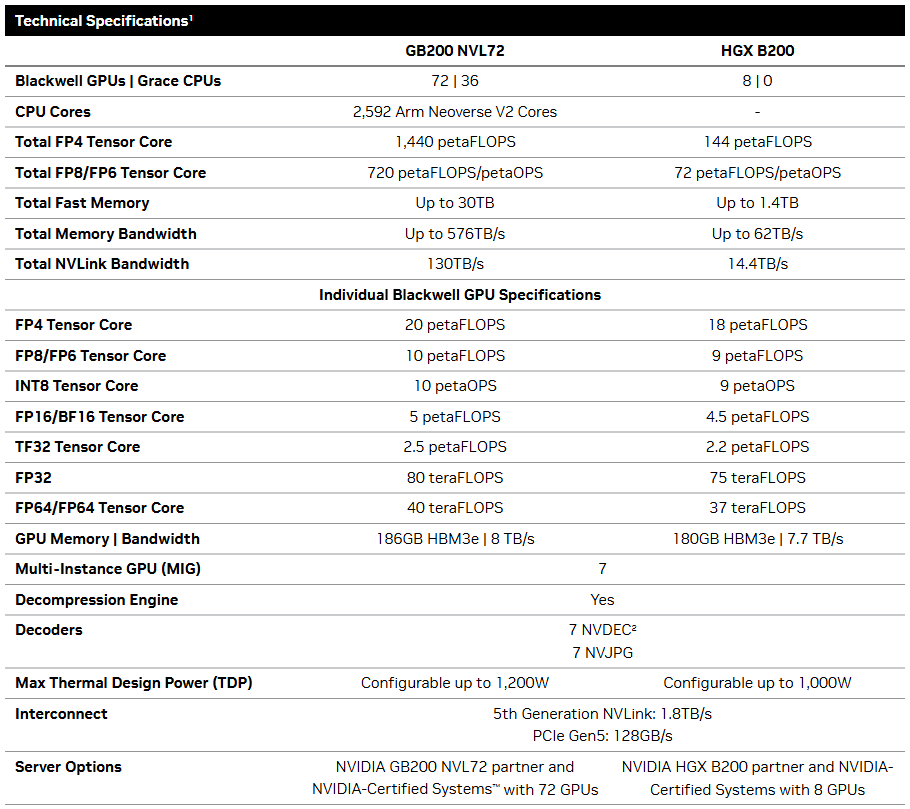

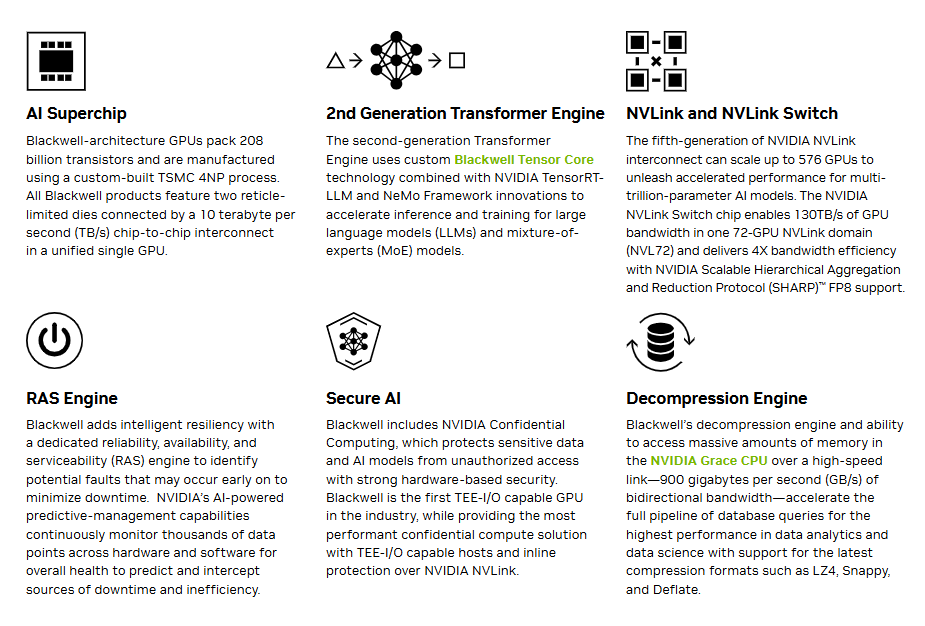

The NVIDIA HGX™ B200 propels the data center into a new era of accelerating

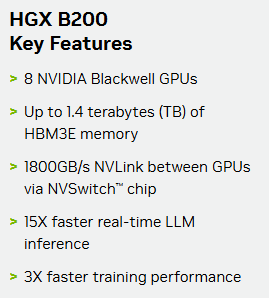

computing and generative AI, integrating NVIDIA Blackwell GPUs with a high-speed interconnect to accelerate AI performance at scale. As a premier accelerated scaleup x86 platform with up to 15X faster real-time inference performance, 12X lower cost, and 12X less energy use, HGX B200 is designed for the most demanding AI, data analytics, and high-performance computing (HPC) workloads.

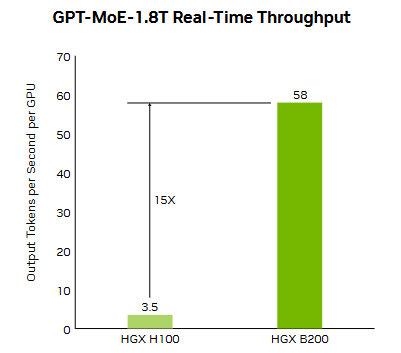

Real-Time Inference for the Next Generation of Large Language Models

HGX B200 achieves up to 15X higher inference performance than the previous NVIDIA Hopper™ generation for massive models such as GPT MoE 1.8T. The second-generation Transformer Engine uses custom Blackwell Tensor Core technology, combined with TensorRT™-LLM and NVIDIA NeMo™ framework innovations, to accelerate inference for LLMs and mixture-of-experts (MoE) models.

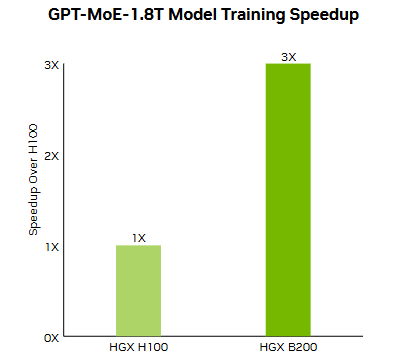

Next-Level Training Performance

The second-generation Transformer Engine, featuring FP8 and new precisions, enables 3X faster training for large language models such as GPT MoE 1.8T. This is complemented by fifth-generation NVLink with 1.8 TB/s of GPU-to-GPU interconnect bandwidth per GPU, NVSwitch™ chips, InfiniBand networking, and NVIDIA Magnum IO™ software. Together, these ensure efficient scaling for enterprises and for large GPU computing clusters.
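For a sense of scale, assuming the 1.8 TB/s NVLink figure applies to each GPU (as NVIDIA specifies for fifth-generation NVLink), summing it across the eight GPUs on one HGX B200 board gives the platform's aggregate NVLink bandwidth. This is a simple sum for illustration only; it is not the bandwidth available between any single pair of GPUs.

$$
B_{\text{total}} = N_{\text{GPU}} \times B_{\text{GPU}} = 8 \times 1.8\ \text{TB/s} = 14.4\ \text{TB/s}
$$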

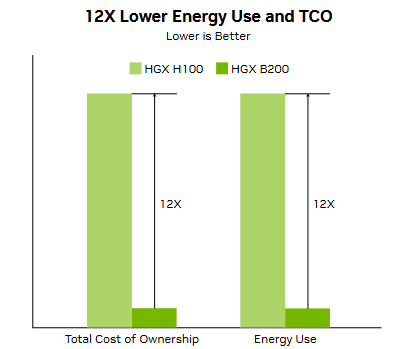

Sustainable Computing

By adopting sustainable computing practices, data centers can lower their carbon footprints and energy consumption while improving their bottom line. Accelerated computing with HGX delivers the efficiency gains that make this goal achievable. For LLM inference, HGX B200 improves energy efficiency by 12X and lowers costs by 12X compared with the Hopper generation.
